import numpy as np

import torch

from torchvision import datasets, transforms

import matplotlib.pyplot as plt

import torchvision

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

train_loader = torch.utils.data.DataLoader(
    datasets.MNIST(root='./data',
                   train=True,
                   download=True,
                   transform=transforms.Compose([
                       transforms.ToTensor(),
                       transforms.Normalize((0.1307,), (0.3081,))
                   ])),
    batch_size=64, shuffle=True)

test_loader = torch.utils.data.DataLoader(
    datasets.MNIST('./data', train=False, transform=transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.1307,), (0.3081,))
    ])),
    batch_size=64, shuffle=True)

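def imshow(img):
    # Undo Normalize((0.1307,), (0.3081,)) so pixel values return to [0, 1]
    img = img * 0.3081 + 0.1307
    npimg = img.numpy()
    # make_grid returns (C, H, W); matplotlib expects (H, W, C)
    plt.imshow(np.transpose(npimg, (1, 2, 0)))
    plt.show()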

dataiter = iter(train_loader)

images, labels = next(dataiter)

imshow(torchvision.utils.make_grid(images))

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # 1 input channel (grayscale), 6 output channels, 5x5 kernel
        self.conv1 = nn.Conv2d(1, 6, 5)
        self.conv2 = nn.Conv2d(6, 16, 5)
        # After two conv + pool stages a 28x28 image becomes 16 maps of 4x4
        self.fc1 = nn.Linear(16 * 4 * 4, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        # Flatten everything except the batch dimension
        x = x.view(-1, self.num_flat_features(x))
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

    def num_flat_features(self, x):
        size = x.size()[1:]  # all dimensions except the batch dimension
        num_features = 1
        for s in size:
            num_features *= s
        return num_features

net = Net()

print(net)

image = images[:2]

label = labels[:2]

out = net(image)

print(out)

criterion = nn.CrossEntropyLoss()

loss = criterion(out, label)

print(loss)

optimizer = optim.SGD(net.parameters(), lr=0.01)

image = images[:2]

label = labels[:2]

optimizer.zero_grad()

out = net(image)

loss = criterion(out, label)

loss.backward()

optimizer.step()

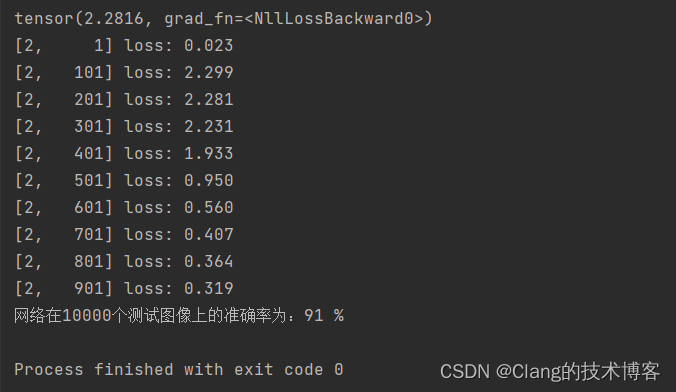

def train(epoch):
    net.train()
    running_loss = 0.0
    for i, data in enumerate(train_loader):
        inputs, labels = data
        optimizer.zero_grad()
        outputs = net(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
        # Report the mean loss once every 100 mini-batches
        if i % 100 == 99:
            print('[%d, %5d] loss: %.3f' %
                  (epoch + 1, i + 1, running_loss / 100))
            running_loss = 0.0

train(1)

correct = 0
total = 0
with torch.no_grad():
    for data in test_loader:
        images, labels = data
        outputs = net(images)
        # The index of the largest logit is the predicted class
        _, predicted = torch.max(outputs, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()

print('Accuracy of the network on the 10000 test images: %d %%' % (100 * correct / total))

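As a sanity check on the `16 * 4 * 4` input size of `fc1`, the following sketch traces the spatial size of a 28x28 MNIST image through the two convolution and pooling stages of `Net`. The helpers `conv_out` and `pool_out` are hypothetical names introduced only for this illustration. They assume stride-1, unpadded convolutions and non-overlapping pooling, which are the defaults the listing relies on.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output width of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel):
    """Output width of non-overlapping max pooling (stride equals kernel)."""
    return size // kernel


size = 28                               # MNIST images are 28x28
size = pool_out(conv_out(size, 5), 2)   # conv1 (5x5), then 2x2 pool: 28 -> 24 -> 12
size = pool_out(conv_out(size, 5), 2)   # conv2 (5x5), then 2x2 pool: 12 -> 8 -> 4
flat_features = 16 * size * size        # conv2 produces 16 feature maps
print(size, flat_features)              # prints: 4 256
```

If the kernel size or the input resolution changes, the first argument of `fc1` would need to be recomputed the same way.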